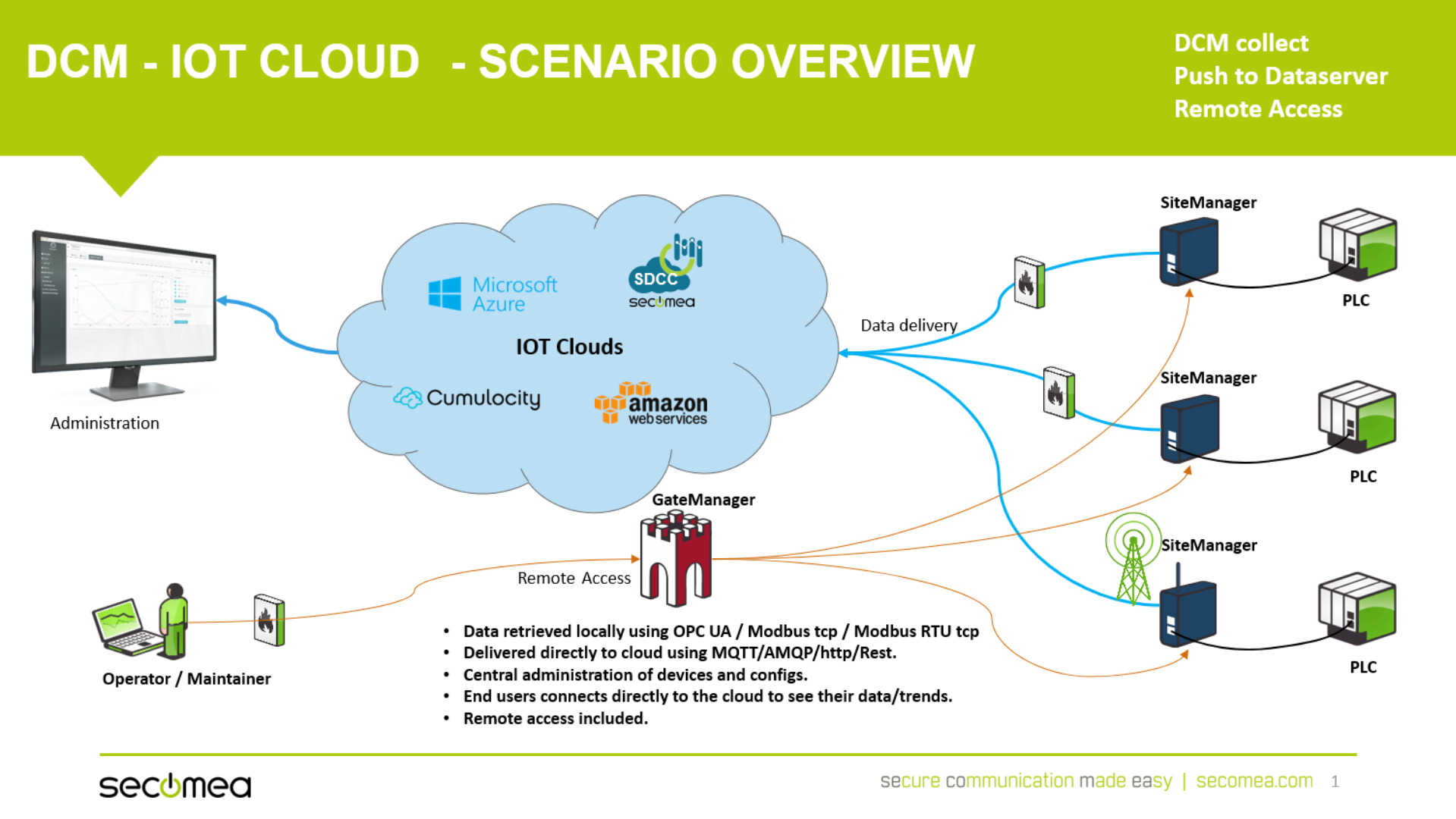

The purpose of this article is to introduce you to our active data collection solution.

Prerequisite

- A Starter package (SiteManager + accounts)

- A cloud subscription (Trial) from:

  - Inuatek

  - Amazon IoT core

  - Cumulocity IoT

  - Microsoft Azure IoT hub

  - Aveva Insight

Data collection is an investment in valuable data for long-term analysis. It lets you see trends and perform preventive and predictive maintenance based on actual data instead of approximated or calculated data.

The Secomea data collection solution is an edge gateway solution. It collects data directly from end devices, such as PLCs or other devices capable of presenting data, via industrial protocols like Modbus or OPC UA.

It should therefore NOT be confused with a SCADA system, in either communication or speed.

This solution enables you to collect data in a cost-effective, secure, and easy way.

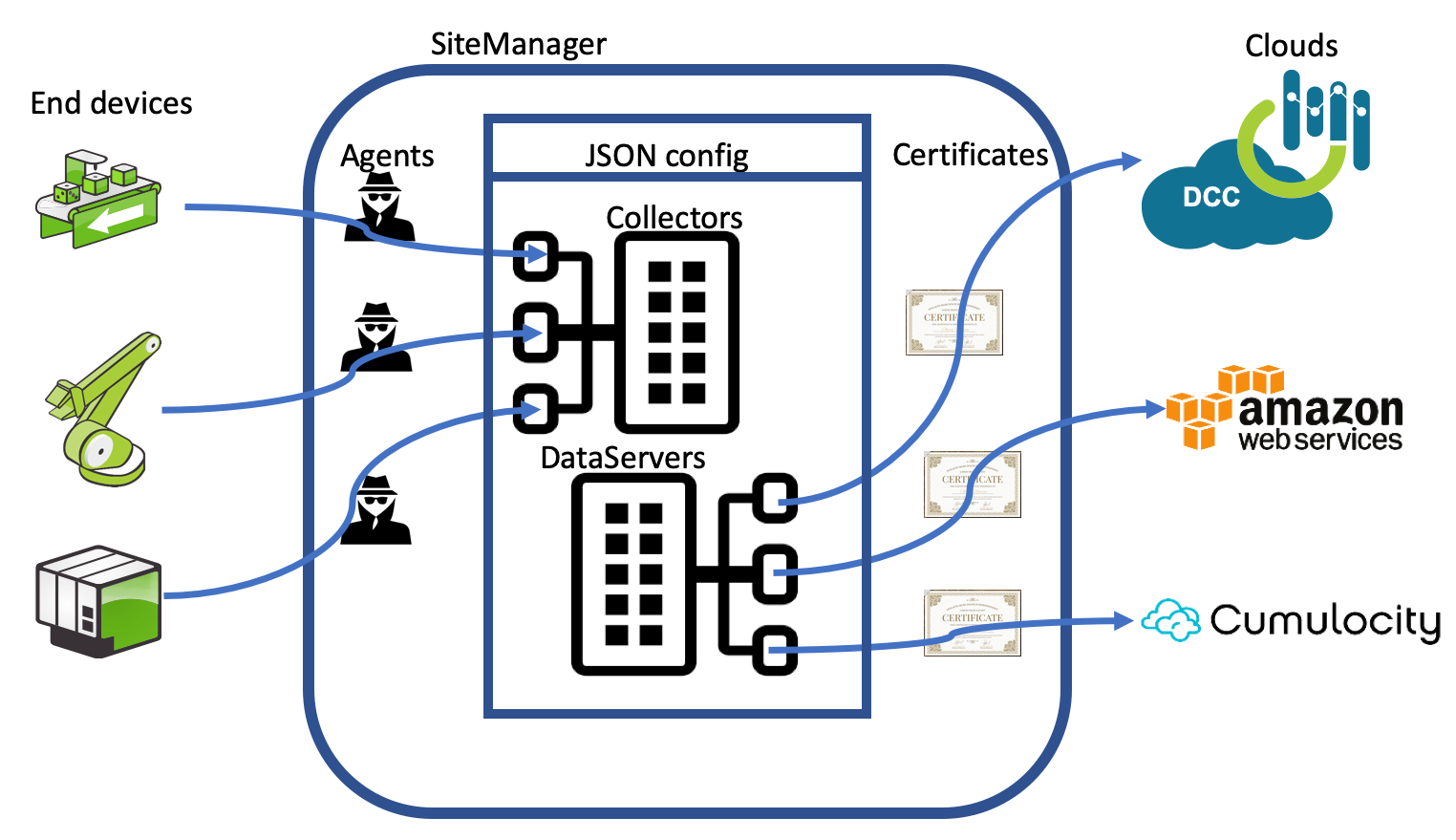

In Secomea data collection, "Agents" define which end device to collect from. The Agent also holds the "CollectorName", which links the Agent to a specific section of the JSON-formatted config file.

In the Config file's DataServer section, the certificates, private key, and connection strings stored in the Certificates storage are linked by the names under which they are stored.

Please select the guide with your chosen cloud:

Getting started with DCM - Schneider Electric EcoStruxure (Machine Advisor)

The 3 SiteManager SW component types in the design are:

The Collector: This component is responsible for collecting data from devices on the site, using a standard protocol: OPC-UA, Modbus, HTTP GET or Rockwell (Note: The Rockwell collector currently has some limitations). The data is delivered to the Aggregator. Multiple instances of these servers may be running at any given time, according to the configuration.

The Store-and-Forward DB : This component is responsible for aggregating collected data and storing them in a store-and-forward database. It will be able to perform simple statistics like Average, Max and Min values, and complex arithmetic calculations on multiple sample values from multiple collectors.

The DataServer: This component is responsible for delivering data from the store-and-forward database to Cloud Services via an SDK protocol: Amazon AWS IoT, Azure IoT Hub, Cumulocity SmartREST, EcoStruxure Machine Advisor REST or SDCC SPD via the Secomea Secure infrastructure. Multiple instances of these servers may be running at any given time, according to the configuration.

Addressing

In order to identify and store the data collected correctly, the addressing of data needs to be very precise:

- Every SiteManager is identified by its EdgeID.

- Every device queried is identified by the collector name (in the configuration file).

- Every sample collected is identified by the collector sample name. (In the configuration file).

- Every aggregation is a new sample created from other sample values and is therefore identified the same way as a normal sample.

- Every sample value is identified by (Timestamp, EdgeID, collectorname, samplename).

The EdgeID uniquely identifies a SiteManager and consists of the official DNS name of the GateManager it is assigned to, and an appliance ID assigned to it by the GateManager.

By NOT using the MAC address of the SiteManager, we allow it to be replaced without changing its ID towards the supported Cloud Services.
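The addressing scheme can be pictured as a composite key. The following is a minimal Python sketch, not DCM's actual data model: the class and field names (`EdgeID`, `SampleKey`, `gatemanager_dns`, `appliance_id`) and all example values are assumptions made for illustration only.

```python
from typing import NamedTuple


class EdgeID(NamedTuple):
    """Hypothetical EdgeID: GateManager DNS name plus appliance ID."""
    gatemanager_dns: str
    appliance_id: str


class SampleKey(NamedTuple):
    """Identifies one sample value: (Timestamp, EdgeID, collectorname, samplename)."""
    timestamp: int        # 100 ns units since 1601-01-01 UTC
    edge_id: EdgeID
    collector_name: str
    sample_name: str


# All values below are invented for illustration.
edge = EdgeID("gm.example.com", "appliance-1")
store = {
    SampleKey(132537600000000000, edge, "plc1", "temperature"): 21.5,
    SampleKey(132537600000000000, edge, "plc1", "pressure"): 1.2,
}

# A replacement SiteManager with the same EdgeID addresses the same data,
# because no MAC address is part of the key.
same_key = SampleKey(
    132537600000000000, EdgeID("gm.example.com", "appliance-1"), "plc1", "temperature"
)
print(store[same_key])
```

Because the key contains no hardware identifier, data from a replaced unit lines up with the data from its predecessor.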

From this follow some rules for the configuration file:

- All collector names within a configuration must be unique.

- Sample names within one collector must be unique.

- "Names" in DCM must start with a letter. They cannot contain whitespace or special characters.

It is envisioned, but not mandated, that all DCM entities (SiteManagers) share the same configuration, so a collector configuration is more like a collector type that may be present at one or more sites.
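The naming rules for the configuration file can be checked mechanically. The sketch below is one possible way to do so, not a Secomea tool. It assumes that "letter" means an ASCII letter and that letters, digits and underscores are the allowed characters (the article itself uses the name "dcm_alarm"); the function name `validate_names` and the input shape are hypothetical.

```python
import re

# Assumption: ASCII letter first, then letters, digits or underscores.
NAME_RE = re.compile(r"[A-Za-z][A-Za-z0-9_]*")


def validate_names(collectors):
    """Return rule violations for a list of (collector_name, [sample_names])."""
    errors = []
    seen_collectors = set()
    for collector_name, sample_names in collectors:
        if not NAME_RE.fullmatch(collector_name):
            errors.append(f"invalid collector name: {collector_name!r}")
        if collector_name in seen_collectors:
            errors.append(f"duplicate collector name: {collector_name!r}")
        seen_collectors.add(collector_name)

        seen_samples = set()  # sample names only need to be unique per collector
        for sample_name in sample_names:
            if not NAME_RE.fullmatch(sample_name):
                errors.append(f"invalid sample name: {collector_name}.{sample_name!r}")
            if sample_name in seen_samples:
                errors.append(f"duplicate sample name: {collector_name}.{sample_name!r}")
            seen_samples.add(sample_name)
    return errors


config = [
    ("plc1", ["temperature", "pressure"]),
    ("plc2", ["temperature"]),       # same sample name in another collector is fine
    ("plc1", ["speed", "speed"]),    # duplicate collector and duplicate sample
    ("2ndLine", ["motor temp"]),     # starts with a digit; contains whitespace
]
problems = validate_names(config)
for problem in problems:
    print(problem)
```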

The Collector

Each collector should have the following parameters:

- Collector Name

- Default Device address (IP-address+port), which can be overruled by local agent configuration.

- Collection protocol used to acquire data from device.

- Protocol specific Access parameters for acquisition of data.

- List of sample values to be acquired:

  - Sample Name

  - Sample address. Depends on protocol used.

  - Sample data type. 8, 16, 32 and 64 bit signed and unsigned integers. 32 or 64 bit floating point. String (max 24K) or raw data (max 24K).

  - Sample frequency. Depends on protocol used.

- Number of aggregated values to keep in store-and-forward database.
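To make the sample data types listed above more concrete, here is a small sketch of the value ranges they imply. It is illustrative only: the helper names are hypothetical, and reading "24K" as 24 KiB (24 × 1024 bytes) is an assumption, since the article does not define the unit.

```python
MAX_BLOB_BYTES = 24 * 1024  # assumption: "24K" is read as 24 KiB


def int_range(bits, signed):
    """Return the (min, max) values an integer of the given width can hold."""
    if signed:
        return -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    return 0, (1 << bits) - 1


def fits_int(value, bits, signed):
    """Check whether an integer value fits the given width and signedness."""
    low, high = int_range(bits, signed)
    return low <= value <= high


def fits_blob(data):
    """Check a string (encoded as UTF-8) or raw bytes against the size limit."""
    if isinstance(data, str):
        data = data.encode("utf-8")
    return len(data) <= MAX_BLOB_BYTES


for bits in (8, 16, 32, 64):
    print(bits, int_range(bits, signed=True), int_range(bits, signed=False))
```

A check like `fits_int(70000, 16, signed=False)` returns `False`, which is the kind of mismatch between a device register and the configured sample data type that is worth catching before deployment.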

The Store-and-Forward DB

The main purpose of the Store-and-Forward DB is to handle failures in connections to sampled devices and to cloud systems. It provides buffering and an aggregation engine.
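The buffering and aggregation idea can be sketched in a few lines of Python. This is a conceptual illustration, not the DCM implementation: the class `StoreAndForward`, its methods, and the policy of dropping the oldest value when the buffer is full are all assumptions.

```python
from collections import deque


class StoreAndForward:
    """Bounded buffer that keeps values until delivery succeeds."""

    def __init__(self, capacity):
        # Assumption: when full, the oldest value is discarded.
        self.buffer = deque(maxlen=capacity)

    def store(self, timestamp, value):
        self.buffer.append((timestamp, value))

    def forward(self, send):
        """Deliver buffered values in order; stop at the first failure."""
        delivered = 0
        while self.buffer:
            if not send(self.buffer[0]):
                break  # connection is down: keep the rest for the next attempt
            self.buffer.popleft()
            delivered += 1
        return delivered

    def aggregate(self):
        """Simple statistics over the buffered values."""
        values = [value for _, value in self.buffer]
        if not values:
            return None
        return {
            "min": min(values),
            "max": max(values),
            "average": sum(values) / len(values),
        }


saf = StoreAndForward(capacity=3)
for ts, value in enumerate([10.0, 20.0, 30.0, 40.0]):
    saf.store(ts, value)  # 10.0 is dropped once 40.0 arrives

stats = saf.aggregate()
offline = saf.forward(lambda item: False)  # cloud unreachable: nothing is lost
sent = []
online = saf.forward(lambda item: sent.append(item) or True)
print(stats, offline, online)
```

Values stay in the buffer while the cloud connection is down and are delivered in order once it returns.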

Aggregations are a special kind of sample value, where data is not acquired but aggregated from other sample values. The following parameters are needed:

The DataServer

- Protocol

- Interval between data delivery to the Cloud Services.

- Protocol parameters for delivery.

Alarms

Alarms are unusual events recorded by the DCM system. They are stored in the Store-and-Forward Database like any other sample value, under the name "dcm_alarm", and are therefore also available to any cloud service that needs them. The number of alarms saved in the Store-and-Forward Database is configurable. Some cloud services (Cumulocity and DCC) have an alarm concept that matches DCM's, and DCM alarms are reported to them as alarms. For other cloud systems, alarms are reported as data for a sample called dcm_alarm:dcm_alarm.

Timestamps

All sample values are stored as time series, which means that every value has a timestamp associated with it. In DCM, a timestamp is a 64-bit signed integer representing the offset since Jan 1st, 1601 UTC in 100 ns units. This is the same representation that the OPC-UA protocol uses, and it comes from Windows NTFS file timestamps (see File Times). The different DataServers convert it into whatever format each cloud system needs.
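As an illustration of the kind of conversion a consumer of these timestamps might perform, the sketch below converts between the 1601-based 100 ns representation and Unix time. The function names are hypothetical; the offset of 11,644,473,600 seconds between 1601-01-01 and 1970-01-01 (UTC) is the standard constant for this conversion.

```python
from datetime import datetime, timedelta, timezone

EPOCH_1601 = datetime(1601, 1, 1, tzinfo=timezone.utc)
UNIX_OFFSET_SECONDS = 11_644_473_600  # seconds from 1601-01-01 to 1970-01-01 UTC
TICKS_PER_SECOND = 10_000_000         # one tick is 100 ns


def dcm_to_datetime(ticks):
    """Convert 100 ns ticks since 1601 to an aware datetime (microsecond precision)."""
    return EPOCH_1601 + timedelta(microseconds=ticks // 10)


def dcm_to_unix_ms(ticks):
    """Convert 100 ns ticks since 1601 to milliseconds since the Unix epoch."""
    return ticks // 10_000 - UNIX_OFFSET_SECONDS * 1000


def unix_seconds_to_dcm(seconds):
    """Convert whole seconds since the Unix epoch to 100 ns ticks since 1601."""
    return (seconds + UNIX_OFFSET_SECONDS) * TICKS_PER_SECOND


ticks = unix_seconds_to_dcm(0)
print(ticks, dcm_to_datetime(ticks).isoformat(), dcm_to_unix_ms(ticks))
```

Note that Python's `datetime` has microsecond resolution, so the last decimal digit of a 100 ns timestamp is dropped in `dcm_to_datetime`.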

Since these timestamps are in "wall time", DCM expects an NTP-synchronized wall clock before it will allow any collector to sample values and thus assign timestamps.

Normally, a time series has increasing (or equal) timestamp values. However, alarms cannot always be guaranteed to be ordered correctly, as many parts of the DCM system can generate alarms at the same time.

The DCM Certificate/Key Store

All of the cloud systems that the DataServers communicate with secure this communication using certificates/keys. The DCM system has a Certificate/Key store, where these are saved with a name and a type. The DCM configuration can then refer to them by this name. In some instances, the configuration doesn't even need to specify the name, as the type is implicit. These Certificate/Key names are called cnames.